Visionary Codd

Summary

Codd wrote extensively on different areas of computing1, but his largest impact is found in the field of databases. His first two papers on the relational model (hereafter “the papers”) were the most influential:

- [first paper] Derivability, Redundancy, and Consistency of Relations Stored in Large Data Banks2 (1969);

- [second paper] A Relational Model of Data for Large Shared Data Banks3 (1970).

The second paper, a revision and extension of the first, is usually credited as the seminal paper in the field, and it is the one I will focus on. These papers laid the theoretical groundwork for the “Relational Model of Data”. I explore the model, some of its fundamental ideas, assumptions, and design choices, and contrast them with modern data analysis and software engineering principles.

Caveats

- The language and ideas expressed in the papers can often be imprecise and inconsistent, sometimes downright puzzling, yet always intriguing and foreboding4. This makes more sense if one considers the state of affairs in 1970, in particular: i) Codd’s multidisciplinary research interests, ii) the nascent state of database technology, iii) the nascent state of software engineering, and iv) the unborn state of data science5. In short, it is best to think of Codd as a computing visionary first and a pioneering data engineer second.

- My observations benefit from the clarity of hindsight going back 50 years.

Vision & Blueprint

In the summary of the second paper, Codd expounds his fundamental motivation:

Future users of large data banks must be protected from having to know how the data is organized in the machine. […] Activities of users at terminals and most application programs should remain unaffected when the internal representation of data is changed. Changes in data representation will often be needed as a result of changes in query, update, and report traffic and natural growth in the types of stored information.

While this notion may appear commonplace and even clichéd to modern database designers, it was incredibly original at the time. In the late 1960s there was no separation of concerns between database internals and application access, which forced end users to know as much about the database as the developer did.

Back then the leading technologies in database research were tree-based, thus requiring hierarchical formatting of the data prior to ingestion. Codd showed that the tree-based databases of the time were too restrictive: not all data is hierarchical, and imposing a hierarchy on non-hierarchical data introduces undue complexity in the data model, and is especially detrimental to downstream data querying and analysis.

In tree-based systems, connections between records were made with pointers, and accessing records required navigating the tree-like structure procedurally (see COBOL). This ruled out non-programmers from interacting with the data directly.

By contrast, Codd envisioned a fully declarative language6 that could serve as a “high level retrieval language which will yield maximal independence between programs and machine representations”. The declarative approach is an enduring component of the relational model today, and by extension of any SQL implementation.

This high-level language would be part of a larger host system (whose implementation details Codd explicitly leaves out of the discussion), and used mainly to support frequent representational changes in the data, a need driven by increased “query, update, and report traffic and natural growth in the types of stored information”. In other words, Codd gave us one of the earliest definitions of the essential operations underpinning modern business intelligence7.
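The contrast between procedural navigation and a declarative request can be sketched in a few lines. This is only an illustration: the employee data and function names are invented, and Python's built-in `sqlite3` module stands in for "a relational engine" (it is obviously not what Codd had in mind in 1970).

```python
import sqlite3

# Hypothetical hierarchical store: departments own their employees, so every
# question has to be answered by walking the tree by hand.
tree = {
    "Engineering": [{"name": "Ada", "salary": 120}, {"name": "Linus", "salary": 95}],
    "Sales": [{"name": "Grace", "salary": 105}],
}


def well_paid_procedural(tree, threshold):
    """Navigate the hierarchy step by step, as a pointer-chasing program would."""
    names = []
    for staff in tree.values():
        for employee in staff:
            if employee["salary"] > threshold:
                names.append(employee["name"])
    return sorted(names)


# The same (made-up) data as a flat relation, queried declaratively.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employee (name TEXT, department TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employee VALUES (?, ?, ?)",
    [(e["name"], dept, e["salary"]) for dept, staff in tree.items() for e in staff],
)


def well_paid_declarative(conn, threshold):
    """State what is wanted; the engine decides how to retrieve it."""
    rows = conn.execute(
        "SELECT name FROM employee WHERE salary > ? ORDER BY name", (threshold,)
    )
    return [name for (name,) in rows]


procedural_result = well_paid_procedural(tree, 100)
declarative_result = well_paid_declarative(conn, 100)
```

Both functions return the same names, but only the first one breaks if the storage layout changes, which is the kind of dependence Codd wanted to remove.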

He concludes the summary by presenting a high-level blueprint of the relational model:

[…] A model based on n-ary relations, a normal form for data base relations, and the concept of a universal data sublanguage [are introduced, and then] certain operations on relations are discussed and applied to the problems of redundancy and consistency in the user’s model.

Relational View of Data

Codd defines an “n-ary relation R” (i.e. a table with n columns) over sets S_1, S_2, ..., S_n as follows:

(1) Each row represents an n-tuple of R.

(2) The ordering of rows is immaterial.

(3) All rows are distinct.

(4) The ordering of columns is significant: it corresponds to the ordering S_1, S_2, ..., S_n of the domains on which R is defined (see, however, remarks below on domain-ordered and domain-unordered relations).

(5) The significance of each column is partially conveyed by labeling it with the name of the corresponding domain.
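A minimal sketch of these properties, assuming a relation is modeled as a Python set of tuples. The domain names follow Codd's supply example; the rows themselves are made up for illustration.

```python
# Domains S_1..S_4 in their defining order (rows below are invented).
domains = ("supplier", "part", "project", "quantity")

# A set of tuples gives properties (1)-(3) for free: each element is an
# n-tuple, sets are unordered, and duplicates collapse.
supply = {
    (1, 2, 5, 17),
    (1, 3, 5, 23),
    (1, 2, 5, 17),  # duplicate row, silently absorbed by the set
}

same_rows_other_order = {(1, 3, 5, 23), (1, 2, 5, 17)}

row_count = len(supply)
order_is_immaterial = supply == same_rows_other_order

# Property (4): position carries meaning, so a value is found by column index.
quantity_index = domains.index("quantity")
total_quantity = sum(row[quantity_index] for row in supply)
```

Here `row_count` is 2 and the two sets compare equal, while reading the quantity still depends on knowing that it sits in the fourth position.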

Properties (1) through (3) are straightforward and should be familiar. Properties (4) and (5) are less so; see his commentary:

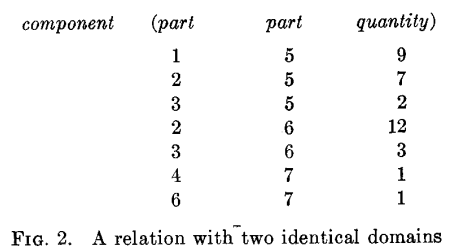

One might ask: If the columns are labeled by the name of corresponding domains, why should the ordering of columns matter? As the example in Figure 2 shows, two columns may have identical headings (indicating identical domains) but possess distinct meanings with respect to the relation. The relation depicted is called component. It is a ternary relation, whose first two domains are called part and third domain is called quantity. The meaning of component (x, y, z) is that part x is an immediate component (or subassembly) of part y, and z units of part x are needed to assemble one unit of part y. It is a relation which plays a critical role in the parts explosion problem8.
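To make the component relation concrete, here is a small sketch of a parts explosion over it. The bicycle data and the `explode` helper are hypothetical, and the recursion assumes the parts structure contains no cycles.

```python
from collections import defaultdict

# component(x, y, z): part x is an immediate component of part y,
# and z units of x are needed to assemble one unit of y.
# Columns 1 and 2 share the domain "part"; only position tells them apart.
component = {
    ("wheel", "bicycle", 2),
    ("frame", "bicycle", 1),
    ("spoke", "wheel", 32),
    ("rim", "wheel", 1),
}


def explode(assembly, units=1):
    """Return total units of each base part needed to build `units` of `assembly`.

    Assumes the component relation is acyclic.
    """
    children = [(x, z) for (x, y, z) in component if y == assembly]
    if not children:  # no sub-parts: `assembly` is itself a base part
        return {assembly: units}
    totals = defaultdict(int)
    for part, quantity in children:
        for base, count in explode(part, units * quantity).items():
            totals[base] += count
    return dict(totals)


bicycle_parts = explode("bicycle")
```

Swapping the first two values of any row would silently reverse which part contains which, which is why column position matters in this encoding.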

Convoluted Design

Properties (4) and (5) of the relational model constitute puzzling design choices. Codd acknowledged some of the complexity stemming from this configuration:

Users should not normally be burdened with remembering the domain ordering of any relation (for example, the ordering supplier, then part, then project, then quantity in the relation supply). Accordingly, we propose that users deal, not with relations which are domain-ordered, but with relationships which are their domain-unordered counterparts.

Despite his recommendation that users deal directly with “domain-unordered relations” (i.e. unordered columns), properties (4) and (5), which enforce meaningful column ordering, remain at the root of the relational model and its predicate operations (e.g. joins) throughout most of these papers and Codd’s later ones.

Puzzling indeed. To state the point clearly: it makes no sense to begin the development of an aspiring general theory of data management, and from the outset make its axioms subordinate to restrictive data-model properties that default to the needs of a narrow use case (i.e. the “parts explosion problem”).

In modern data analysis, meaningful column ordering is an abandoned idea: it matters only for presentation and convenience, and not at all for internal operations9.
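A short sketch of the domain-unordered alternative, assuming rows are keyed by attribute name instead of position. The `relationship` helper and the role names `sub_part` and `super_part` are illustrative choices, not Codd's notation.

```python
def relationship(rows):
    """Build a domain-unordered relation: a set of rows keyed by attribute name."""
    return {frozenset(row.items()) for row in rows}


a = relationship([
    {"sub_part": "wheel", "super_part": "bicycle", "quantity": 2},
])
b = relationship([
    {"quantity": 2, "super_part": "bicycle", "sub_part": "wheel"},
])

# Attribute names, not positions, carry the meaning.
columns_reordered_equal = a == b

# The positional encoding does not survive the same reshuffle.
positional_equal = {("wheel", "bicycle", 2)} == {(2, "bicycle", "wheel")}
```

With distinct role names the two "part" columns no longer collide, so the column order can be dropped without losing information.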

Relational Defects & Aftermath

The language surrounding the relational model is somewhat obscure, especially for an engineer using it to build a brand new “universal data sublanguage”. C. J. Date, Codd’s collaborator and fellow relational purist, carefully dissects many of the “defects” in the two papers10. Some of the imprecisions that arise:

- Unclear terminology (e.g. “domain” vs “attribute”, “relation” vs “relationship”, “atomic” vs “non-atomic”, “time-varying relationship”, “super key” vs “primary key” vs “key”);

- Missing terminology (e.g. the word “table” is not found in the paper at all);

- Awkward expressions (e.g. querying a table is “exploiting the relation”).

In Date’s words:

It’s a curious fact that, despite its title, the 1970 paper nowhere says exactly what the relational model consists of. (Actually the 1969 paper doesn’t do so either.)

In the following years Codd would continue to expand his view of the relational model, often adding more constraints and complexity. In 1985 he wrote his Twelve Rules of a DBMS. This later work has not been nearly as influential11, and industry, driven by operational needs, went the other way, shedding complexity and embracing simplicity.

Regardless, it was Codd who laid the foundation for the relational view of data. Today, the relational model endures in simplified form in dozens of SQL implementations. Whichever flavor of SQL one is using, the basic building blocks of the relational model at the very least imply that:

- Data is organized in tables (i.e. relations), using columns and rows (i.e. tuples).

- Tables are manipulated using relational operators.
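As a rough sketch of what “relational operators” means in practice, here are toy versions of selection, projection, and natural join over rows stored as dictionaries. The function names and sample data are my own, and lists are used instead of sets only to keep the output order predictable.

```python
def select(relation, predicate):
    """Selection (restriction): keep the rows that satisfy the predicate."""
    return [row for row in relation if predicate(row)]


def project(relation, columns):
    """Projection: keep the named columns and drop duplicate rows."""
    seen, result = set(), []
    for row in relation:
        key = tuple(row[c] for c in columns)
        if key not in seen:
            seen.add(key)
            result.append(dict(zip(columns, key)))
    return result


def natural_join(left, right):
    """Natural join: pair rows that agree on every shared column."""
    joined = []
    for left_row in left:
        for right_row in right:
            shared = left_row.keys() & right_row.keys()
            if all(left_row[c] == right_row[c] for c in shared):
                joined.append({**left_row, **right_row})
    return joined


# Hypothetical sample data.
employees = [
    {"name": "Ada", "dept": "eng"},
    {"name": "Grace", "dept": "sales"},
    {"name": "Linus", "dept": "eng"},
]
departments = [
    {"dept": "eng", "city": "Zurich"},
    {"dept": "sales", "city": "Boston"},
]

in_zurich = project(
    select(natural_join(employees, departments), lambda r: r["city"] == "Zurich"),
    ["name"],
)
```

Each operator takes relations and returns a relation, so they compose freely; a SQL `SELECT ... FROM ... JOIN ... WHERE` expresses the same pipeline declaratively.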

Questionable Assumptions—Right Intuition

In the first paper, Codd stated that the relational model appeared to be “superior in several respects” to the graph or network model. However, he provided no evidence to back his claim; in fact, he didn’t mention the network model again.

In the second paper he tried to correct this. Building on the concept of “data independence” (i.e. separation of concerns between application and database internals), he first critiqued the graph model and then introduced the relational model as a better alternative. Specifically, he premised the relational model on the following assumptions:

(1) The network model has spawned a number of confusions, not the least of which is mistaking the derivation of connections for the derivation of relations (see remarks on the “connection trap”).

(2) The relational view provides a means of describing data with its natural structure only—that is, without superimposing any additional structure for machine representation purposes. Accordingly, it provides a basis for a high level data language which will yield maximal independence [between programs and machine].

(3) [A relational model] permits the development of a universal data sublanguage based on an applied predicate calculus. A first-order predicate calculus suffices if the collection of relations is in normal form.

Assumption (1) is completely misguided. It refers to an interpretation error that arises within the context of the parts-explosion problem (but isn’t really exclusive to it). Namely, if a supplier is linked to a part, and that part is linked to multiple projects, then the “connection trap” would be to conclude that this specific supplier supplies the part to all projects that use it. Clearly, one part can be supplied by any number of suppliers (a many-to-one relationship), so this conclusion is not generally correct. This misinterpretation may arise with any data model and is not intrinsic to the network model.
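The trap is easy to reproduce with plain sets of tuples, which supports the point that it is not tied to any particular data model. The suppliers, parts, and projects below are invented for illustration.

```python
# Hypothetical ground truth: who supplies which part to which project.
supply = {
    ("acme", "bolt", "bridge"),
    ("zenith", "bolt", "tower"),
}

# Two binary relations obtained from it by projection.
supplier_part = {(s, p) for (s, p, j) in supply}
part_project = {(p, j) for (s, p, j) in supply}

# Composing the binary relations on the shared part...
inferred = {
    (s, j)
    for (s, p1) in supplier_part
    for (p2, j) in part_project
    if p1 == p2
}

# ...versus what the ternary relation actually records.
actual = {(s, j) for (s, p, j) in supply}

# Supplier/project pairs that the composition suggests but that never happened.
spurious = inferred - actual
```

Here `spurious` contains the pairs `("acme", "tower")` and `("zenith", "bridge")`: connections that exist through a shared part but correspond to no real supply.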

Assumption (2) is interesting. Storing data in its “natural” form, without “superimposing additional structure” reads almost like a prophecy for NoSQL technology. For context, NoSQL—while not clearly defined—usually refers to non-relational databases with flexible schema policies that don’t use SQL. Evidently, Codd’s natural way to store data meant the normal (tabular) form required by the relational model. The normal form, however, would later turn out to be too restrictive, and a more natural form would come with XML and JSON in the late 1990s and early 2000s.

His call for “maximal independence” between application and database was fundamental for the growth of “Large Shared Data Banks” (i.e. Integration Databases). In an integration database, multiple applications have direct and immediate access to data reads and writes, thus facilitating interoperability between applications. But it comes at a high price: applications may have competing priorities, and schemas usually have to become larger, more general, and more complex. The need for independence and modularization is often better addressed by using Application Databases and service-oriented architectures (SOA). SOA allows full decoupling regardless of the data model.

Finally, Assumption (3) is the foundation for data analysis. Even if data is first collected without a normalized schema (see schema on read vs schema on write), it will need to be normalized at some point prior to analysis; this is especially true for statistical modeling and machine learning. Normalizing data is necessary for analysis but not sufficient: subsequent work cleaning and preparing data is usually reported as taking up approximately 80% of data analysis work9.
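A minimal sketch of that normalization step, assuming nested, document-style input of the kind a schema-on-read store might hold. The order records and the `to_rows` helper are hypothetical, and the result only reaches atomic-valued rows (first normal form); further decomposition is left out.

```python
# Hypothetical schema-on-read input: nested, document-style records.
orders = [
    {
        "order_id": 1,
        "customer": "Ada",
        "items": [{"sku": "bolt", "qty": 10}, {"sku": "nut", "qty": 5}],
    },
    {
        "order_id": 2,
        "customer": "Grace",
        "items": [{"sku": "bolt", "qty": 3}],
    },
]


def to_rows(orders):
    """Flatten nested orders into one atomic-valued row per line item."""
    return [
        (order["order_id"], order["customer"], item["sku"], item["qty"])
        for order in orders
        for item in order["items"]
    ]


rows = to_rows(orders)

# With flat rows, an aggregate is a single pass over one column.
bolts_ordered = sum(qty for (_, _, sku, qty) in rows if sku == "bolt")
```

Once the data is in this shape, grouping, filtering, and feeding it to a statistical model all become column operations.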

Implementation Details & Contribution

In 1970, Codd was quite aware of the complexity of the task ahead:

Many questions are raised and left unanswered. For example, only a few of the more important properties of the data sublanguage are mentioned. Neither the purely linguistic details of such a language nor the implementation problems are discussed. Nevertheless, the material presented should be adequate for experienced systems programmers to visualize several approaches. It is also hoped that this paper can contribute to greater precision in work on formatted data systems.

Codd’s imprecisions in his first two relational papers pale in comparison to the magnitude of his contribution. His vision was multidisciplinary and far-reaching, encompassing core topics in modern computing, software engineering and data analysis. His ideas were foundational in declarative programming, human-computer interaction, business intelligence and of course relational databases (and the omnipresent SQL).

Since his seminal papers, relational data stores have been the mainstay in databases, powering years of progress in information technology. Nowadays, the primary divide in the field, namely the choice between SQL and NoSQL paradigms, can be traced back to him and his call for a more “natural” representation of data.

Notes

- Derivability, Redundancy, and Consistency of Relations Stored in Large Data Banks (Codd, 1969)

- A Relational Model of Data for Large Shared Data Banks (Codd, 1970)

- It would take roughly a decade of engineering effort by many labs to actually implement the concepts and make the technology commercially available.

- In 1970 SAS and SPSS were still being actively developed, and Tukey was struggling to make sense of a “data analysis” discipline as a separate area from academic statistics. Thus, I use the term “data science” in the narrower, modern definition beginning in the late 2000s. The distinction is significant because data science as we understand it today evolves directly from relational database technology and the capacity to analyze and model normalized data.

- The gist of declarative programming is that the user instructs the machine what to do (using natural language–like commands as much as possible), and in turn the machine figures out an optimal way to do it (e.g. by way of internal query optimization). Later in the 1970s Codd would work on Rendezvous, a prototype for a Natural Language Interface built on top of a relational database.

- He would later coin Online Analytical Processing in a 1993 paper. “Typical applications of OLAP include business reporting for sales, marketing, management reporting, business process management (BPM), budgeting and forecasting, financial reporting, etc.”

- “The parts-explosion problem, sometimes called bill-of-materials processing. At the heart of this problem is a recursive relationship among objects; one object contains other objects, which contain yet others. The problem is usually stated in terms of a manufacturing inventory.” Parts-Explosion Problem

- On the theoretical side, Codd’s work has been mostly superseded by Date and Darwen’s Third Manifesto.